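1. Running

Newer toolchains ship binutils 2.38 or later, which breaks builds of older xvisor versions: CSR instructions fail with Error: unrecognized opcode, or the build stops with errors about 'zifencei'. The likely cause is that binutils 2.38 defaults to a newer ISA spec version in which CSR access and fence.i live in the separate Zicsr and Zifencei extensions, so an -march string without them no longer assembles.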

Compilation Issue · Issue #142 · xvisor/xvisor · GitHub

Invalid or unknown z ISA extension: 'zifencei' · Issue #150 · xvisor/xvisor · GitHub

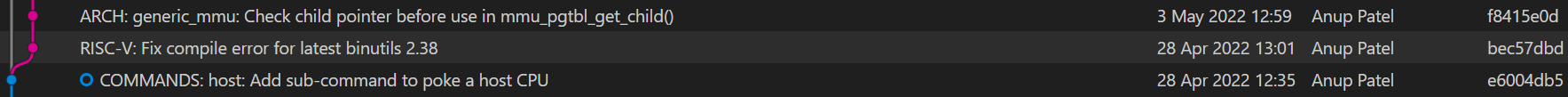

This problem was fixed by a commit made on 2022-04-28.

binutil fix

After building an older toolchain, I tried compiling v0.3.1 with it, mostly following xvisor's riscv64-qemu.txt document.

Exploring virtualization in RISC-V machines - embeddedinn

    cd <xvisor repo>
    [...]$(nproc)
    cd <opensbi_source_directory>
    cd <linux_source_directory>
    cp arch/riscv/configs/defconfig arch/riscv/configs/tmp-virt64_defconfig
    [...] arch/riscv/configs/tmp-virt64_defconfig -f <xvisor_source_directory>/tests/riscv/virt64/linux/linux_extra.config
    mkdir build_riscv
    [...]$(nproc) O=./build_riscv/ ARCH=riscv Image dtbs
    [...] true
    [...] -M virt -m 512M -nographic -bios /codes/opensbi/build/platform/generic/firmware/fw_jump.bin -kernel ./build/vmm.bin -initrd ./build/disk.img -append 'vmm.bootcmd="vfs mount initrd /;vfs run /boot.xscript;vfs cat /system/banner.txt"'
    [...] 'vmm.bootcmd="vfs mount initrd /;vfs run /boot.xscript;vfs cat /system/banner.txt"'
    [...] 'vmm.bootcmd="vfs mount initrd /;vfs run /boot.xscript;vfs cat /system/banner.txt"'
    [...] 'vmm.bootcmd="vfs mount initrd /;vfs run /boot.xscript;vfs cat /system/banner.txt"'
    [...] -drive file=/arceos_2024S/arceos/apps/hv/guest/linux/rootfs.img,if=none,id=drive0 -device virtio-blk-device,drive=drive0,id=virtioblk0
    [...] 'root=/dev/vda vmm.bootcmd="vfs mount initrd /;vfs run /boot.xscript;vfs cat /system/banner.txt"'
    [...] -drive file=/arceos_2024S/arceos/apps/hv/guest/linux/rootfs.img,if=none,id=drive0 -device virtio-blk-device,drive=drive0,id=virtioblk0
    [...] "root=/dev/vda rw console=ttyS0,115200 earlycon=uart8250,mmio,0x10000000"
    [...] 'root=/dev/vda vmm.bootcmd="vfs mount initrd /;vfs run /boot.xscript;vfs cat /system/banner.txt"'
    [...] -drive file=/arceos_2024S/arceos/apps/hv/guest/linux/rootfs.img,if=none,id=drive0 -device virtio-blk-device,drive=drive0,id=virtioblk0 -s -S
    [...] 'root=/dev/vda vmm.bootcmd="vfs mount initrd /;vfs run /boot.xscript;vfs cat /system/banner.txt"'
    [...] -drive file=/arceos_2024S/arceos/apps/hv/guest/linux/rootfs.img,if=none,id=drive0 -device virtio-blk-device,drive=drive0,id=virtioblk0

2. Blowing up

    [guest0/uart0] [ 0.000000] riscv-intc: 64 local interrupts mapped
    [...]

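A version from 2022-04-28 ran into trouble. I found a related report in the issue tracker:

riscv: run linux in xvisor, occur fault on wirte plic reg · Issue #146 · xvisor/xvisor · GitHub

The issue was posted in 2022-09, so it should be fixed in later versions.

The thread says the fix went into xvisor-next. I looked through that repository's September commits and found the corresponding one:

EMULATORS: plic: Fix number of irq lines · avpatel/xvisor-next@e475b1a · GitHub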

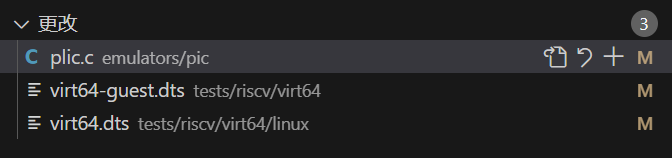

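It looks like a problem with the number of interrupt lines. I applied the change directly to the version I was using, and after that it ran.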

patch

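x. Revival

Boot fails because the /dev/ram device cannot be read.

After a long search I found that the guest's boot arguments are hard-coded by default in arch_board.c: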

root=/dev/ram rw console=ttyS0,115200 earlycon=uart8250,mmio,0x10000000

They have to be changed with the linux_cmdline command after binding vserial.

Together with vdisk attach, the guest can boot from a different disk image:

https://github.com/xvisor/xvisor/issues/168

Nice.

3. vscode debug

The usual setup:

    {
        "version": "0.2.0",
        "configurations": [
            {
                "name": "qemu connect",
                "type": "cppdbg",
                "request": "launch",
                "program": "${workspaceFolder}/build/vmm.elf",
                "args": [],
                "stopAtEntry": false,
                "cwd": "${fileDirname}",
                "environment": [],
                "externalConsole": false,
                "MIMode": "gdb",
                "setupCommands": [
                    {
                        "description": "Enable pretty-printing for gdb",
                        "text": "-enable-pretty-printing",
                        "ignoreFailures": false
                    },
                    {
                        "description": "Say goodbye to Cannot access memory",
                        "text": "set riscv use-compressed-breakpoints yes",
                        "ignoreFailures": false
                    }
                ],
                "miDebuggerPath": "riscv64-unknown-linux-gnu-gdb",
                "miDebuggerServerAddress": "localhost:1234"
            }
        ]
    }

    &per_cpu(chpstate, cpu)
    /* ... */ virtual_addr_t)(&percpu_chpstate)) + __percpu_offset[cpu]}))

4. PLIC

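When a vcpu tries to access a device, a STORE_GUEST_PAGE_FAULT is raised. Based on the faulting address and related information, cpu_vcpu_emulate_store/load is executed, followed by the corresponding vmm_devemu_emulate_write/read.

This looks up which emulated device owns the vmm_region containing the address and uses that device's read/write operations.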
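The lookup described above can be sketched as a table search followed by a call through the device's handler. This is a minimal model, not xvisor's code: `struct region`, `find_region`, `emulate_store` and `reg_file_write` are hypothetical names invented for the illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical write handler type; not xvisor's real signature. */
typedef int (*emu_write_fn)(void *priv, uint64_t offset, uint32_t value);

/* Hypothetical stand-in for a guest region backed by an emulated device. */
struct region {
    uint64_t gpa_start;  /* guest physical start address */
    uint64_t size;       /* length of the region in bytes */
    emu_write_fn write;  /* write handler of the emulated device */
    void *priv;          /* per-device state passed to the handler */
};

/* Return the region that contains addr, or NULL if there is none. */
static const struct region *find_region(const struct region *tbl, size_t n,
                                        uint64_t addr)
{
    assert(tbl != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        if (addr >= tbl[i].gpa_start &&
            addr - tbl[i].gpa_start < tbl[i].size) {
            return &tbl[i];
        }
    }
    return NULL;
}

/* Dispatch a trapped guest store to the device that owns the address. */
static int emulate_store(const struct region *tbl, size_t n,
                         uint64_t addr, uint32_t value)
{
    const struct region *r = find_region(tbl, n, addr);

    if (r == NULL || r->write == NULL) {
        return -1; /* no emulated device at this address */
    }
    return r->write(r->priv, addr - r->gpa_start, value);
}

/* Example device: a small file of 32-bit registers. */
static int reg_file_write(void *priv, uint64_t offset, uint32_t value)
{
    uint32_t *regs = priv;

    regs[offset / sizeof(uint32_t)] = value;
    return 0;
}
```

A trapped store to an address inside a region ends up in that region's handler with an offset relative to the region start; a store that hits no region is reported as an error.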

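xvisor seems to emulate every device, including the serial port? When Linux configures the serial port during boot, the virtual serial device looks up the handler of the corresponding chip in the guest it belongs to, namely plic_irq_handle, and raises a virtual interrupt for the guest.

The rough flow is:

The guest reads and writes the PLIC to set enables, priorities and so on. The access is intercepted by a PAGE_FAULT and the host applies it to the virtual PLIC.

The guest reads and writes a device. The access is intercepted by a PAGE_FAULT, the host performs the operation on the virtual device, and in some cases the virtual device raises a "software-level" interrupt.

Raising that interrupt actually means setting a pending bit in the virtual PLIC, which models the interaction between a physical device and the PLIC on real hardware.

After virtual PLIC state such as pending changes, __plic_context_irq_update may run. Using the enable and priority configuration in the virtual PLIC, it works out whether the virtual PLIC currently has an interrupt it can deliver. If so, it asserts an interrupt on the vcpu, which in practice sets irq-related fields in the vcpu structure.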
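The update step can be modelled as "pick the best deliverable source, then assert or not". The sketch below is a simplified model under stated assumptions: a single context, 32 sources, and a boolean standing in for the vcpu's irq state. `struct vplic`, `vplic_best_pending` and `vplic_set_pending` are hypothetical names, not functions from xvisor.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define NUM_IRQS 32 /* assumed size for the sketch; source 0 is reserved */

/* Hypothetical single-context virtual PLIC state. */
struct vplic {
    uint32_t pending;            /* bit n set: source n is pending */
    uint32_t enable;             /* bit n set: source n is enabled */
    uint8_t  priority[NUM_IRQS]; /* priority 0 never interrupts */
    uint8_t  threshold;          /* only priority > threshold is delivered */
    bool     vcpu_irq_asserted;  /* stand-in for the vcpu's irq state */
};

/* Return the deliverable source with the highest priority, or 0 if none.
 * On equal priority the lowest source number wins. */
static uint32_t vplic_best_pending(const struct vplic *p)
{
    uint32_t best = 0;
    uint8_t best_prio = p->threshold;

    for (uint32_t irq = 1; irq < NUM_IRQS; irq++) {
        uint32_t bit = UINT32_C(1) << irq;

        if ((p->pending & bit) && (p->enable & bit) &&
            p->priority[irq] > best_prio) {
            best = irq;
            best_prio = p->priority[irq];
        }
    }
    return best;
}

/* A virtual device raises source irq: set pending, then re-evaluate. */
static void vplic_set_pending(struct vplic *p, uint32_t irq)
{
    assert(irq > 0 && irq < NUM_IRQS);
    p->pending |= UINT32_C(1) << irq;
    p->vcpu_irq_asserted = (vplic_best_pending(p) != 0);
}
```

A pending source that is disabled, or whose priority does not exceed the threshold, leaves the vcpu's irq state untouched, which matches the idea that the guest's own PLIC configuration decides what is delivered.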

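I did not fully understand vmm_manager_vcpu_hcpu_func and vcpu_irq_wfi_resume; they seem to be related to scheduling. Once plic_irq_handle is done, control returns all the way to do_handle_trap. At the end of that function, vmm_scheduler_irq_exit sets up the interrupt injection according to the current vcpu's irq state. For example, if an interrupt was asserted on the vcpu earlier, this is detected here by checking vcpu->irqs.irq[irq_no].assert, and hvip is set.

After _handle_hyp_exception returns, execution goes back to the guest. If hvip is set, the interrupt fires in the guest.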
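The translation from "asserted" flags to hvip bits can be sketched as follows. The bit positions (VSSIP = 2, VSTIP = 6, VSEIP = 10) come from the RISC-V hypervisor extension; `enum virq`, `struct vcpu_irqs` and `hvip_from_irqs` are hypothetical names, and the function only computes the value instead of writing the CSR.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* hvip bit positions defined by the RISC-V hypervisor extension. */
#define HVIP_VSSIP (UINT64_C(1) << 2)  /* VS-level software interrupt */
#define HVIP_VSTIP (UINT64_C(1) << 6)  /* VS-level timer interrupt */
#define HVIP_VSEIP (UINT64_C(1) << 10) /* VS-level external interrupt */

/* Hypothetical per-vcpu interrupt lines for this sketch. */
enum virq { VIRQ_SOFT, VIRQ_TIMER, VIRQ_EXT, VIRQ_COUNT };

struct vcpu_irqs {
    bool asserted[VIRQ_COUNT];
};

/* Compute the hvip value that injects every asserted line. */
static uint64_t hvip_from_irqs(const struct vcpu_irqs *irqs)
{
    static const uint64_t hvip_bit[VIRQ_COUNT] = {
        [VIRQ_SOFT]  = HVIP_VSSIP,
        [VIRQ_TIMER] = HVIP_VSTIP,
        [VIRQ_EXT]   = HVIP_VSEIP,
    };
    uint64_t hvip = 0;

    assert(irqs != NULL);
    for (int i = 0; i < VIRQ_COUNT; i++) {
        if (irqs->asserted[i]) {
            hvip |= hvip_bit[i];
        }
    }
    return hvip;
}
```

With only the external line asserted the result is 0x400, which is the VSEIP bit the guest sees as a supervisor external interrupt.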

Open problems:

The vcpu_irq_wfi_resume path.

If a device is identity-mapped in the stage-2 page table, does that mean the VM can control the device directly?

5. wfi & ipi

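In xvisor's design, wfi is configured so that it cannot execute directly inside a virtual machine.

When hstatus.VTW=1 and mstatus.TW=0, a wfi executed in VS-mode raises a virtual instruction exception if it does not complete within a certain time (implementation-defined, possibly 0).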
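The rule can be written down as a small decision function. This sketch only models the case where the wfi does not complete within the time limit. TW and VTW both sit at bit 21 of their respective CSRs; `struct csr_view`, `enum wfi_outcome` and `wfi_in_vs_mode` are hypothetical names for the illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MSTATUS_TW  (UINT64_C(1) << 21) /* mstatus.TW: timeout wait */
#define HSTATUS_VTW (UINT64_C(1) << 21) /* hstatus.VTW: virtual timeout wait */

/* Hypothetical snapshot of the two CSRs that matter here. */
struct csr_view {
    uint64_t mstatus;
    uint64_t hstatus;
};

enum wfi_outcome {
    WFI_MAY_STALL,    /* wfi executes and may stall the hart */
    WFI_ILLEGAL_INSN, /* illegal instruction exception */
    WFI_VIRTUAL_INSN  /* virtual instruction exception, handled in HS-mode */
};

/* Outcome of a wfi in VS-mode that does not complete in time. */
static enum wfi_outcome wfi_in_vs_mode(const struct csr_view *csr)
{
    assert(csr != NULL);
    if (csr->mstatus & MSTATUS_TW) {
        return WFI_ILLEGAL_INSN;
    }
    if (csr->hstatus & HSTATUS_VTW) {
        return WFI_VIRTUAL_INSN;
    }
    return WFI_MAY_STALL;
}
```

Only the combination VTW=1, TW=0 yields the virtual instruction exception that lets the hypervisor take over when the guest idles.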

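With this setting, a vcpu that executes wfi exits the virtual machine. The VMM can then mark the vcpu as being in the wfi state, make it non-runnable, and schedule it out.

When an irq is asserted on a vcpu that is in the wfi state, its wfi flag is cleared and the vcpu is made runnable again.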
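The two transitions form a very small state machine. The sketch below is a model only: `struct vcpu_model`, `on_wfi_trap` and `on_irq_assert` are hypothetical names, and the real code additionally deals with timeouts and scheduling.

```c
#include <assert.h>
#include <stdbool.h>

enum vcpu_state { VCPU_RUNNING, VCPU_READY, VCPU_PAUSED };

/* Hypothetical, heavily reduced vcpu bookkeeping. */
struct vcpu_model {
    enum vcpu_state state;
    bool wfi; /* true while the vcpu sleeps in an emulated wfi */
};

/* The guest's wfi trapped into the hypervisor: park the vcpu. */
static void on_wfi_trap(struct vcpu_model *v)
{
    assert(v->state == VCPU_RUNNING);
    v->wfi = true;
    v->state = VCPU_PAUSED; /* the scheduler will pick another vcpu */
}

/* An irq was asserted on the vcpu. Returns true if it was woken up. */
static bool on_irq_assert(struct vcpu_model *v)
{
    if (!v->wfi) {
        return false; /* not sleeping in wfi, nothing to resume */
    }
    v->wfi = false;
    v->state = VCPU_READY; /* runnable again */
    return true;
}
```

An assert on a vcpu that is not in wfi changes nothing in this model; only a parked vcpu moves back to the ready state.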

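After an interrupt is asserted on a vcpu for the first time, the following is executed:

    vmm_manager_vcpu_hcpu_func(vcpu, /* ... */

It checks whether the vcpu is in an interruptible state, gets the number of the physical cpu the vcpu is on, and builds a cpu_mask:

    if (arch_atomic_read(&vcpu->state) & state_mask) {
        /* ... */ vmm_cpumask_of(vcpu->hcpu);

In the end it calls:

    vmm_smp_ipi_sync_call(cpu_mask, 0, /* ... */
    vmm_smp_ipi_sync_call(cpu_mask, 0, /* ... */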

It contains this logic:

    for_each_cpu(c, dest) {
        if (c == cpu) {
            func(arg0, arg1, arg2);
        } else {
            if (!vmm_cpu_online(c)) {
                continue;
            }
            /* ... */
            smp_ipi_sync_submit(&per_cpu(ictl, c), &ipic);
            vmm_cpumask_set_cpu(c, &trig_mask);
        }
    }

It iterates over every cpu marked in the destination cpu_mask. If that cpu is the current physical cpu, func is executed directly, namely vcpu_irq_wfi_resume wrapped by manager_vcpu_hcpu_func.

If the marked cpu is not the current physical cpu, smp_ipi_sync_submit is executed.

This enqueues the IPI information on the target physical cpu's queue and calls arch_smp_ipi_trigger to raise an IPI on the target physical cpu.

With synchronous IPIs, after sending the IPI to other cores it may also be necessary to check, within a bounded time, whether the target cores' IPI queues are empty (that is, whether the targets have finished all their IPI functions), and to report a timeout accordingly:

    while (!fifo_enqueue(ictlp->sync_fifo, ipic, FALSE) && try) {
        arch_smp_ipi_trigger(vmm_cpumask_of(ipic->dst_cpu));
        vmm_udelay(SMP_IPI_WAIT_UDELAY);
        try--;
    }

    void arch_smp_ipi_trigger(const struct vmm_cpumask *dest)
    {
        if (smp_ipi_available) {
            vmm_host_irq_raise(smp_ipi_irq, dest);
        }
    }

    int vmm_host_irq_raise(u32 hirq, const struct vmm_cpumask *dest)
    {
        struct vmm_host_irq *irq;

        if (NULL == (irq = vmm_host_irq_get(hirq)))
            return VMM_ENOTAVAIL;
        if (irq->chip && irq->chip->irq_raise) {
            irq->chip->irq_raise(irq, dest);
        }
        return VMM_OK;
    }
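The bounded retry in the submit loop can be isolated in a single-threaded model: if the queue is full, kick the consumer, then try again a limited number of times. Everything here is hypothetical (`struct small_fifo`, `submit_with_retry`, the two kick functions); the delay between attempts is left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define FIFO_CAP 2 /* tiny on purpose so the full case is easy to hit */

struct small_fifo {
    int items[FIFO_CAP];
    unsigned head;
    unsigned count;
};

static bool fifo_push(struct small_fifo *q, int item)
{
    if (q->count == FIFO_CAP) {
        return false;
    }
    q->items[(q->head + q->count) % FIFO_CAP] = item;
    q->count++;
    return true;
}

static bool fifo_pop(struct small_fifo *q, int *out)
{
    if (q->count == 0) {
        return false;
    }
    *out = q->items[q->head];
    q->head = (q->head + 1) % FIFO_CAP;
    q->count--;
    return true;
}

typedef void (*kick_fn)(struct small_fifo *q);

/* Try to enqueue; on a full queue kick the consumer and retry.
 * Returns false if the queue is still full after `tries` kicks. */
static bool submit_with_retry(struct small_fifo *q, int item,
                              unsigned tries, kick_fn kick)
{
    assert(q != NULL && kick != NULL);
    while (!fifo_push(q, item)) {
        if (tries == 0) {
            return false; /* timed out */
        }
        kick(q); /* stands in for triggering the IPI and waiting */
        tries--;
    }
    return true;
}

/* A responsive consumer: each kick makes it process one entry. */
static void kick_drain_one(struct small_fifo *q)
{
    int tmp;

    (void)fifo_pop(q, &tmp);
}

/* An unresponsive consumer: the kick has no effect. */
static void kick_ignored(struct small_fifo *q)
{
    (void)q;
}
```

With an unresponsive consumer the submit gives up after the configured number of attempts; with a responsive one the first kick frees a slot and the enqueue succeeds.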

Here irq_raise is defined in drivers/irqchip/irq-riscv-aclint-swi.c:

    static struct vmm_host_irq_chip aclint_swi_irqchip = {
        /* ... */ "riscv-aclint-swi",
        /* ... */
    };

    static void aclint_swi_raise(struct vmm_host_irq *d,
                                 const struct vmm_cpumask *mask)
    {
        u32 cpu;
        /* ... */
        /* ... */ per_cpu(aclint_swi_reg, cpu);
        vmm_writel(1, swi_reg);
        /* ... */
    }

As shown, it writes 1 to aclint_swi_reg.

aclint_swi_reg is initialized in aclint_swi_init():

    /* ... */ vmm_devtree_request_regmap(node, &va, 0, "RISC-V ACLINT SWI");
    /* ... */
    vmm_smp_map_hwid(cpu, &thart_id);
    if (thart_id != hart_id) {
        continue;
    }
    per_cpu(aclint_swi_reg, cpu) = /* ... */ sizeof(u32) * i);
    break;

It maps the ACLINT's physical address to the virtual address va. Each hart's software interrupt register then sits at a 4-byte stride from that base, matching the ACLINT register layout; see the ACLINT manual for details.
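The 4-byte stride means the register for hart index i sits at base + 4 * i. A minimal sketch, using an ordinary array in place of the mapped device memory; `swi_reg_offset` and `swi_raise` are hypothetical helper names.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Byte offset of the software interrupt register for a hart index. */
static size_t swi_reg_offset(unsigned hart_index)
{
    return sizeof(uint32_t) * hart_index;
}

/* Raise a software interrupt on one hart by writing 1 to its register.
 * swi_base points at the first register of the (mapped) device. */
static void swi_raise(volatile uint32_t *swi_base, unsigned num_harts,
                      unsigned hart_index)
{
    assert(swi_base != NULL && hart_index < num_harts);
    swi_base[hart_index] = 1; /* same address as base + swi_reg_offset() */
}
```

For example, hart index 3 uses the register at byte offset 12 from the mapped base.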

When the target hart receives the IPI, it enters software interrupt handling and reaches smp_ipi_handler:

    static vmm_irq_return_t smp_ipi_handler(int irq_no, void *dev)
    {
        vmm_smp_ipi_exec();
        return VMM_IRQ_HANDLED;
    }

    void vmm_smp_ipi_exec(void)
    {
        struct smp_ipi_call ipic;
        struct smp_ipi_ctrl *ictlp = &this_cpu(ictl);

        while (fifo_dequeue(ictlp->sync_fifo, &ipic)) {
            if (ipic.func) {
                ipic.func(ipic.arg0, ipic.arg1, ipic.arg2);
            }
        }

        if (!fifo_isempty(ictlp->async_fifo)) {
            vmm_completion_complete(&ictlp->async_avail);
        }
    }

vmm_smp_ipi_exec() dequeues the IPI information queued earlier on the current physical cpu and executes the function it carries, namely vcpu_irq_wfi_resume wrapped by manager_vcpu_hcpu_func.

vcpu_irq_wfi_resume(vcpu, ...) clears the vcpu's wfi state, stops the wfi timeout event, and puts the vcpu in the Ready state so that it can be scheduled.
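The receiving side boils down to "drain the queue and call whatever was queued". The sketch below models that with a small ring buffer of call records; `struct ipi_call`, `struct ipi_queue`, `ipi_enqueue`, `ipi_exec` and `mark_resumed` are hypothetical names, and `mark_resumed` merely stands in for the resume function.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

typedef void (*ipi_fn)(void *arg0, void *arg1, void *arg2);

/* One queued cross-cpu call. */
struct ipi_call {
    ipi_fn func;
    void *arg0;
    void *arg1;
    void *arg2;
};

#define IPI_QLEN 4

struct ipi_queue {
    struct ipi_call calls[IPI_QLEN];
    unsigned head;
    unsigned count;
};

/* Sender side: queue a call for the target cpu. */
static bool ipi_enqueue(struct ipi_queue *q, const struct ipi_call *c)
{
    if (q->count == IPI_QLEN) {
        return false;
    }
    q->calls[(q->head + q->count) % IPI_QLEN] = *c;
    q->count++;
    return true;
}

/* Receiver side: drain the queue, running every call that has a
 * function. Returns the number of functions executed. */
static unsigned ipi_exec(struct ipi_queue *q)
{
    unsigned executed = 0;

    assert(q != NULL);
    while (q->count > 0) {
        struct ipi_call c = q->calls[q->head];

        q->head = (q->head + 1) % IPI_QLEN;
        q->count--;
        if (c.func) {
            c.func(c.arg0, c.arg1, c.arg2);
            executed++;
        }
    }
    return executed;
}

/* Example payload: flag a (hypothetical) vcpu as resumed. */
static void mark_resumed(void *arg0, void *arg1, void *arg2)
{
    (void)arg1;
    (void)arg2;
    *(bool *)arg0 = true;
}
```

Records without a function are dequeued and skipped, mirroring the `if (ipic.func)` check in the handler.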

There is also an asynchronous IPI scheme.

vmm_main.c initializes asynchronous IPIs:

    #if defined(CONFIG_SMP)
        /* ... */ "asynchronus inter-processor interrupts\n");
        /* ... */
        if (ret) {
            goto init_bootcpu_fail;
        }
    #endif

    static struct vmm_cpuhp_notify smp_async_ipi_cpuhp = {
        /* ... */ "SMP_ASYNC_IPI",
        /* ... */
    };

    int __init vmm_smp_async_ipi_init(void)
    {
        return vmm_cpuhp_register(&smp_async_ipi_cpuhp, TRUE);
    }

Where:

    int vmm_cpuhp_register(struct vmm_cpuhp_notify *cpuhp, bool invoke_startup)
    {
        /* ... */
            if (cpu == curr_cpu)
                continue;
            if (cpuhp->state <= chps->state) {
                /* ... */ NULL, NULL);
            }
        /* ... */
    }

    static void cpuhp_register_sync(void *arg1, void *arg2, void *arg3)
    {
        struct vmm_cpuhp_notify *cpuhp = /* ... */
        struct cpuhp_state *chps = /* ... */

        if (cpuhp->startup && (cpuhp->state <= chps->state))
            /* ... */
    }

smp_async_ipi_startup creates an orphan_vcpu for the current cpu that is dedicated to running smp_ipi_main.

    static int smp_async_ipi_startup(struct vmm_cpuhp_notify *cpuhp, u32 cpu)
    {
        int rc = VMM_EFAIL;
        char vcpu_name[VMM_FIELD_NAME_SIZE];
        struct smp_ipi_ctrl *ictlp = /* ... */

        /* ... */ sizeof(vcpu_name), "ipi/%d", cpu);
        /* ... */ (virtual_addr_t)&smp_ipi_main,
        /* ... */
        if (!ictlp->async_vcpu) {
            /* ... */
            goto fail;
        }

        /* ... */
        if ((rc = vmm_manager_vcpu_kick(ictlp->async_vcpu))) {
            goto fail_free_vcpu;
        }

        return VMM_OK;

        /* ... */
        return rc;
    }

smp_ipi_main() is a producer-consumer style loop. It keeps trying to take IPI information out of the current physical cpu's queue and execute it:

    static void smp_ipi_main(void)
    {
        struct smp_ipi_call ipic;
        struct smp_ipi_ctrl *ictlp = /* ... */

        while (1) {
            /* ... */
            while (fifo_dequeue(ictlp->async_fifo, &ipic)) {
                if (ipic.func) {
                    /* ... */
                }
            }
        }
    }

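6. External interrupts

Take the serial port as an example.

xvisor dedicates a vcpu to its console, mterm.

When a key is pressed, the system receives a physical external interrupt and reads the physical PLIC's claim register to find out which device raised it. Once it knows the source is the serial port, it reads the data from the physical serial port, puts it into the virtual serial queue, and wakes the vcpu waiting on that queue.

That vcpu keeps reading the serial queue and forwards the contents to the virtual serial device that corresponds to the virtual serial port. After processing the data, the virtual device calls the virtual PLIC to raise a virtual external interrupt for the guest.

On receiving the interrupt, the guest queries the virtual PLIC's claim register to find out which device raised it. Once it knows the source is the virtual serial device, it accesses the virtual device to fetch the data.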
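The three hops (physical interrupt handler, console vcpu, guest) can be modelled with two byte queues. This is a simplified single-threaded sketch with hypothetical names (`struct byte_queue`, `struct vuart`, `host_uart_irq`, `console_forward`, `guest_uart_read`); the virtual PLIC is reduced to one `irq_pending` flag.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define QLEN 16

struct byte_queue {
    uint8_t data[QLEN];
    unsigned head;
    unsigned count;
};

static bool bq_push(struct byte_queue *q, uint8_t b)
{
    if (q->count == QLEN) {
        return false;
    }
    q->data[(q->head + q->count) % QLEN] = b;
    q->count++;
    return true;
}

static bool bq_pop(struct byte_queue *q, uint8_t *out)
{
    if (q->count == 0) {
        return false;
    }
    *out = q->data[q->head];
    q->head = (q->head + 1) % QLEN;
    q->count--;
    return true;
}

/* Hypothetical virtual UART: receive FIFO plus its interrupt line. */
struct vuart {
    struct byte_queue rx;
    bool irq_pending; /* stands in for the virtual PLIC pending bit */
};

/* Hop 1: physical UART interrupt, store the byte in the console queue. */
static bool host_uart_irq(struct byte_queue *console_q, uint8_t ch)
{
    return bq_push(console_q, ch);
}

/* Hop 2: console vcpu drains the queue into the virtual UART and
 * raises the virtual interrupt if anything was delivered. */
static unsigned console_forward(struct byte_queue *console_q, struct vuart *u)
{
    unsigned n = 0;
    uint8_t ch;

    assert(console_q != NULL && u != NULL);
    while (u->rx.count < QLEN && bq_pop(console_q, &ch)) {
        (void)bq_push(&u->rx, ch);
        n++;
    }
    if (n > 0) {
        u->irq_pending = true;
    }
    return n;
}

/* Hop 3: the guest reads one byte; the line drops once the FIFO is empty. */
static bool guest_uart_read(struct vuart *u, uint8_t *out)
{
    bool ok = bq_pop(&u->rx, out);

    if (u->rx.count == 0) {
        u->irq_pending = false;
    }
    return ok;
}
```

Two key presses go through as: two calls to `host_uart_irq`, one `console_forward` that moves both bytes and raises the interrupt, then two guest reads, after which the interrupt line is low again.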

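In this setup, keyboard input is put into the serial queue, delivered to the emulated device bound to the console, and from there to the guest bound to the console. Although multiple guests share this structure, it seems that only one guest can be bound to the console at a time, which amounts to exclusive use of the queue.

Serial input is therefore effectively exclusive. What about more complex devices, such as a network card? How is it decided which guest an interrupt and its data should go to? How do multiple guests share one physical NIC?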